For additional assistance, please contact support@metavilabs.com.

About FastTrack AI

FastTrack AI is MetaVi Labs' automated analysis system, based on our Artificial Intelligence Engine.

Contents

- What Is AI?

- Benefits of FastTrack AI

- Neural Network Architecture

- 2015: A Pivotal Year for Machine Vision

- The Training Process

- The Computational Cost

- External References

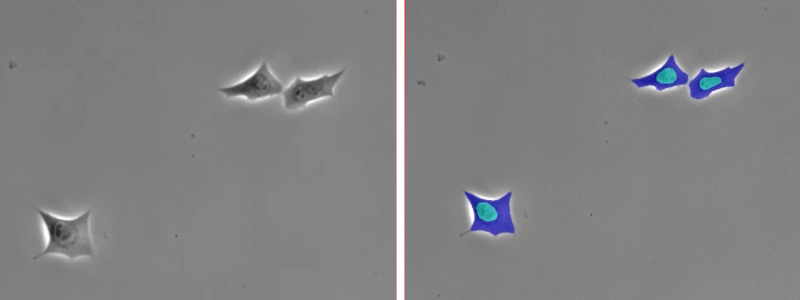

The movie below is an example of how AI can be used to find specific features within a cell. On the top are the input images (phase contrast). On the bottom are the outputs of the FastTrack AI engine. The engine was trained to find cell nuclei and also cell bodies excluding nuclei. Different colors are painted by the AI engine to indicate its prediction results (light blue indicates a prediction of nuclei, dark blue indicates a prediction of cell body).

What Is AI?

Artificial Intelligence (AI) is a broad term that refers to mathematical and software models that allow computers to learn patterns and then find the patterns they have learned. Machine Learning and Deep Learning are roughly synonymous terms. AI applies to many different types of problems, and machine vision is just one subset.

MetaVi Labs specializes in vision and microscopy analysis so we focus on the machine vision category of AI. Fundamentally, we teach computers what cells, or other structures, look like, then the computer finds them for us.

The key concerns in an AI system for vision are:

- the neural network architecture

- the training process

- the pre- and post-processing required to capture useful and usable data

- computational cost

Benefits of FastTrack AI

While several automated tracking software packages are on the market, most require careful adjustment of image processing parameters such as thresholding and contrast. These software solutions often rely on object classification techniques developed in the early days of image analysis. These crude methods are error-prone and require user intervention. Modern methods use complex filters that recognize shapes much as the human visual system does.

Some important advantages of FastTrack AI:

- Highest accuracy of cell identification on a wide array of cell morphologies

- Tracks very high numbers of cells (up to 1000 per image) for much higher relevance of results

- Saves hundreds of hours of labor over manual analysis

- Improved accuracy over human tracking

- Works on phase contrast with no labels or dyes required

- Complete end-to-end solution for full automation of high content screening applications

- Very simple drag-and-drop interface for maximum ease-of-use

- Very comprehensive reports with easy-to-read condition comparison charts and raw data

Neural Network Architecture

MetaVi Labs uses a form of deep residual convolutional neural network (ResNet). The term "neural network" comes from the fact that the model is patterned after our limited understanding of vision systems in nature. Object recognition in the brain is a complex multi-stage, multi-step process. Signals flow through layers where each layer appears to have a specialized task in the process of converting signals from light sensitive neurons into labeled objects.

By labeled objects we mean the names or concepts of what an object is. For example, I see a "dog" chasing a "cat". Dog and cat in this example are labels. Likewise, MetaVi Labs teaches our ResNet what objects look like using labels. For example, we use labels for objects such as "cells", "apoptotic bodies", or "cell nuclei". There will be more on the training process below, but essentially it is associating pixel patterns with labels.

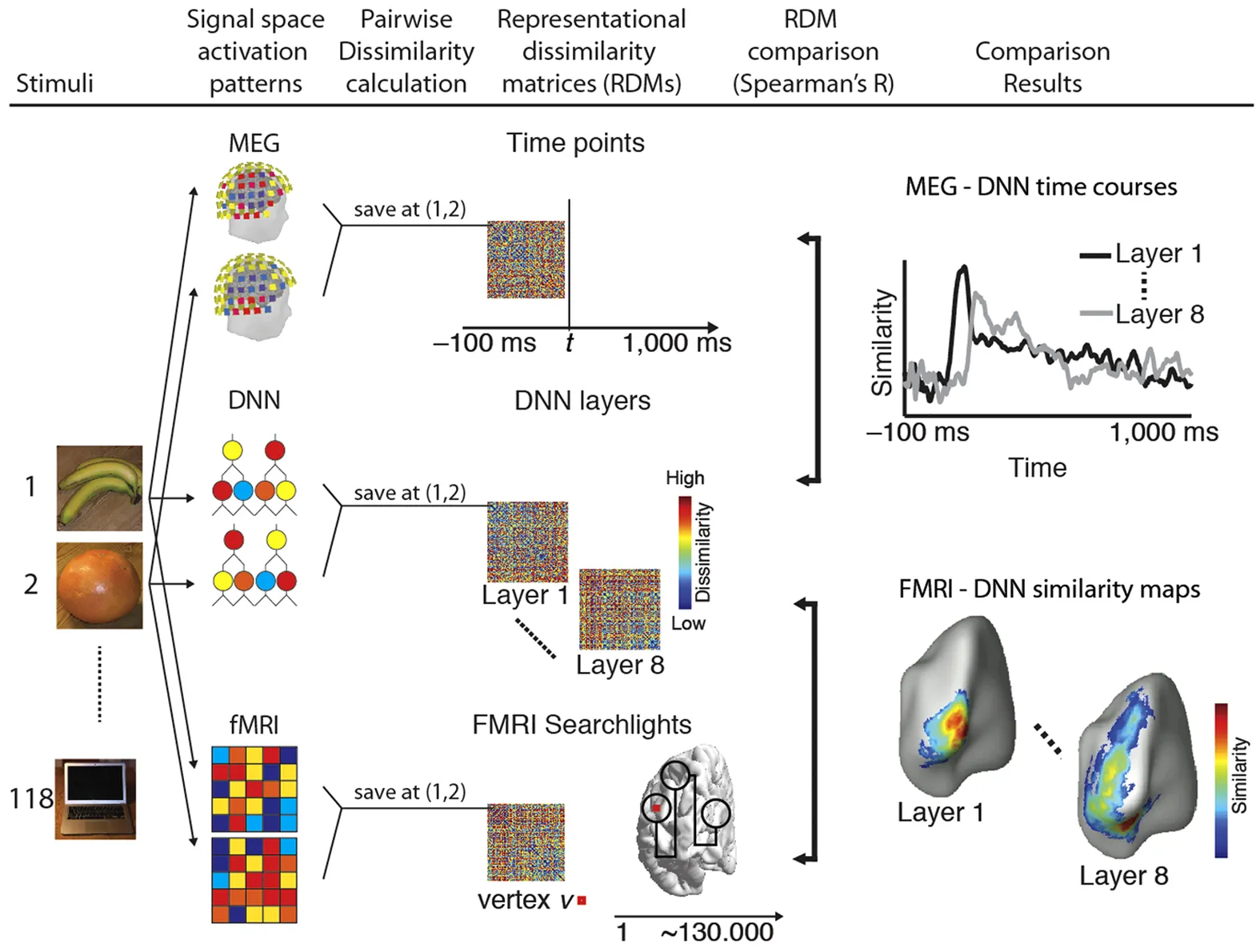

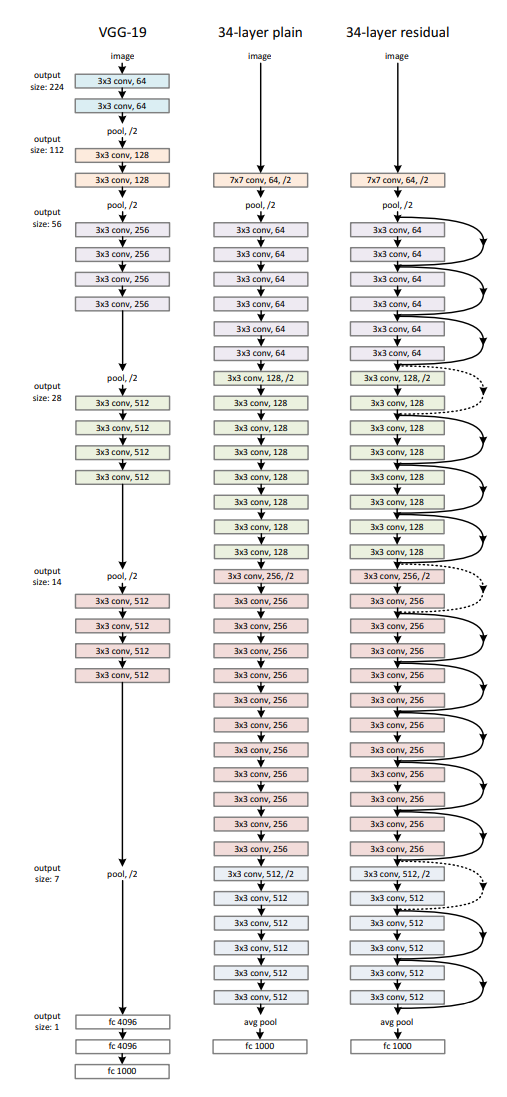

While the animal vision system is complex and our understanding is vague, a recent article in Nature (Cichy, 2016) shows a remarkable similarity between deep neural networks and human vision: Comparison of deep neural networks to spatio-temporal cortical dynamics of human visual object recognition reveals hierarchical correspondence.

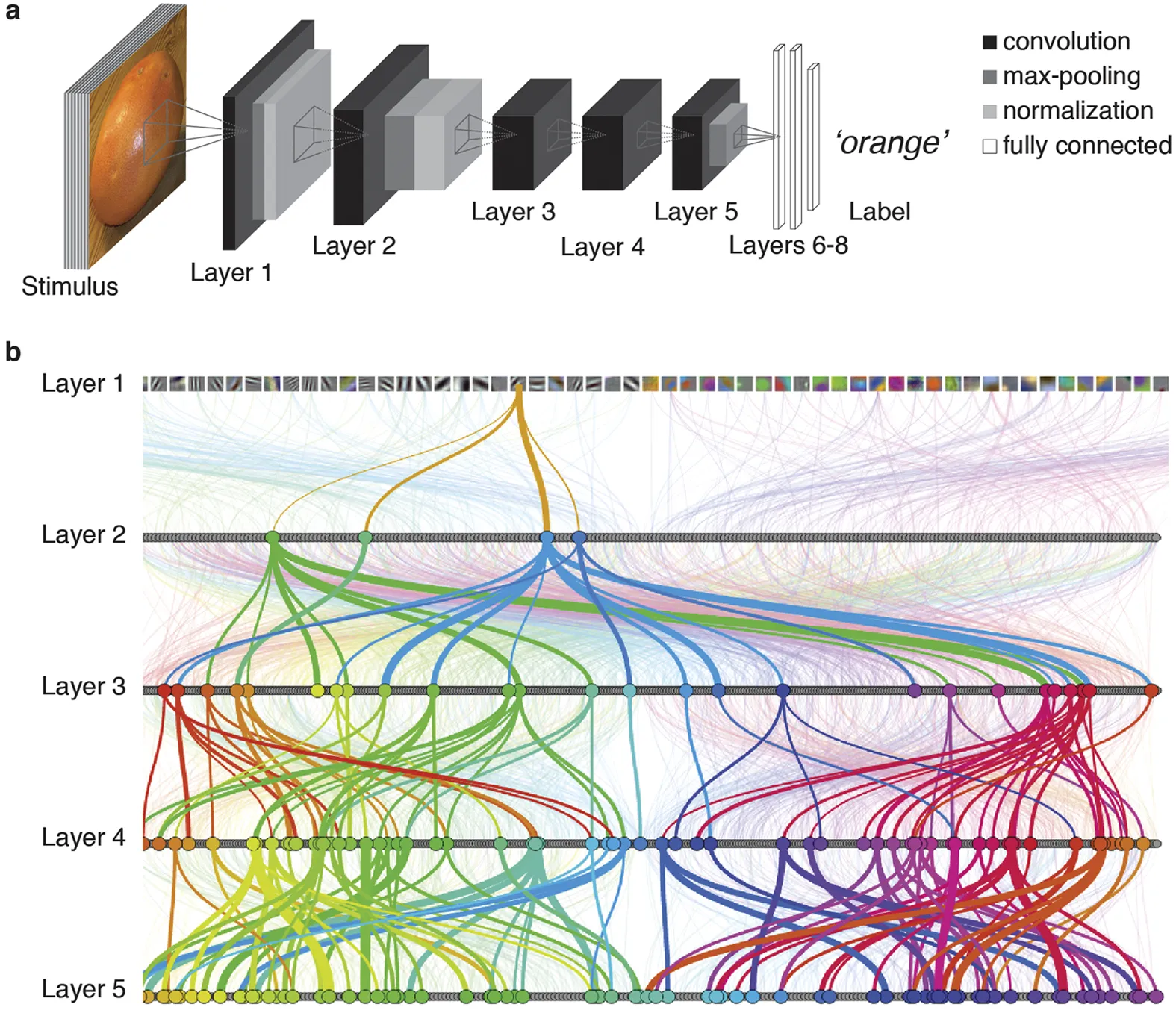

The image below, from the Nature (Cichy, 2016) article, depicts the typical deep learning network (but the residual return paths are not shown).

The term 'deep' comes from the multiple layers. While only 5 layers are depicted, it is typical to use 50 to 150 connected layers in a deep network.
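The residual return paths mentioned above can be illustrated with a minimal sketch. It is not the FastTrack AI implementation: a real ResNet uses convolutions and normalization layers, while this sketch substitutes small dense layers so it runs with the Python standard library alone. The function names and the weight layout are assumptions made for illustration.

```python
def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def dense(values, weights):
    """Multiply a vector by a weight matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, values)) for row in weights]


def residual_block(x, w1, w2):
    """Return relu(F(x) + x), where F is two dense layers with a ReLU between.

    The '+ x' term is the residual (shortcut) path: the block only has to
    learn a correction F(x) on top of its input.
    """
    fx = dense(relu(dense(x, w1)), w2)
    return relu([f + xi for f, xi in zip(fx, x)])


def deep_network(x, blocks):
    """Chain many residual blocks; 'blocks' is a list of (w1, w2) pairs."""
    for w1, w2 in blocks:
        x = residual_block(x, w1, w2)
    return x


# With all-zero weights F(x) is zero, so every block passes its
# (non-negative) input through unchanged, even across 50 stacked blocks.
zeros = [[0.0, 0.0], [0.0, 0.0]]
signal = deep_network([1.0, 2.0], [(zeros, zeros)] * 50)
```

Because an untrained block can fall back to passing its input through, stacking many such blocks does not have to degrade the signal, which is one common explanation for why very deep residual networks remain trainable.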

2015: A Pivotal Year for Machine Vision

There are two major international annual competitions for visual recognition:

- Imagenet - Large Scale Visual Recognition Challenge (ILSVRC)

- Microsoft COCO: Common Objects in Context

In the year 2015, a network design by a team from Microsoft beat all challengers in ILSVRC2015 and COCO-2015. This design is captured in a paper that now has over 34,000 citations (He, 2015) and became known as ResNet. Their design had 152 layers. This approach was so powerful that it revolutionized AI for vision applications.

The MetaVi Labs FastTrack AI implementation is based on this same design. The image below from (He, 2015) is a graphical representation of the 152-layer residual network design.

The Training Process

Training means teaching the ResNet what objects are by associating pixels with labels. For example, we can produce a set of images of many cells and label all of them as "cell". And we can provide images of apoptotic bodies and label them as "apoptotic bodies". But in reality the process is not so simple. We must also teach the computer model what pixels are not cells and not other objects we are training on.
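As a rough illustration of associating pixels with labels, the sketch below scores a prediction against hand-made labels using per-pixel softmax cross-entropy. The source does not state which loss function FastTrack AI uses, so the loss, the class list, and the explicit "background" class (standing in for "not a cell and not any other trained object") are all assumptions.

```python
import math

# Hypothetical label set; "background" covers pixels that are none of the targets.
CLASSES = ["background", "cell", "apoptotic body"]


def softmax(scores):
    """Turn raw per-class scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pixel_loss(scores, label):
    """Cross-entropy for one pixel: -log(probability of the true label)."""
    probs = softmax(scores)
    return -math.log(probs[CLASSES.index(label)])


def mean_loss(score_map, label_map):
    """Average the per-pixel loss over a whole image (lists of rows)."""
    losses = [
        pixel_loss(scores, label)
        for score_row, label_row in zip(score_map, label_map)
        for scores, label in zip(score_row, label_row)
    ]
    return sum(losses) / len(losses)
```

Training would adjust the network weights to push this number down. Background pixels contribute to the average just like cell pixels, which is how the model is also taught what is not a cell.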

One of the key challenges in images of living and moving cells is that the cells are constantly morphing in shape as they locomote, divide, and die. Additional challenges are the wide variety of imaging modes, exposures, fields-of-view, and overall image quality in the microscopy images.

MetaVi Labs trainers are individuals who have viewed thousands of hours of cell images and movies, such that they have become experts in what the target objects look like. The experts are then able to create specialized training images (with the aid of software) that can be used to train the network. So human experts train computers; human knowledge is transferred to the computer.

In the sample image below (also see the sample movie at the top of this page), the trainers created a few hundred examples of what cell nuclei are in the phase contrast image, and also labeled cell bodies excluding nuclei. They also indicated what are not cell nuclei and what are not cell bodies. Once trained, the FastTrack AI engine was able to predict which pixels are nuclei and which pixels are cell bodies (indicated with the different shades of blue). The sample movie at the top of this article is an example of a fully predicted time sequence based on a training set of a few frames.
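The step from per-pixel predictions to the painted output can be sketched as follows. This is an illustration under assumptions, not the engine's code: the class order, the per-class score format, and the exact RGB values are hypothetical; the source only states that light blue marks nuclei and dark blue marks cell bodies.

```python
# Hypothetical class order and colors (RGB). Background keeps the original gray.
CLASS_NAMES = ["background", "nucleus", "cell body"]
CLASS_COLORS = {
    "background": None,
    "nucleus": (173, 216, 230),   # light blue
    "cell body": (0, 0, 139),     # dark blue
}


def predict_classes(score_map):
    """Pick the highest-scoring class name for every pixel."""
    return [
        [CLASS_NAMES[scores.index(max(scores))] for scores in row]
        for row in score_map
    ]


def paint(gray_image, class_map):
    """Overlay class colors on a grayscale image, returning RGB tuples."""
    painted = []
    for gray_row, class_row in zip(gray_image, class_map):
        out_row = []
        for gray, name in zip(gray_row, class_row):
            color = CLASS_COLORS[name]
            out_row.append((gray, gray, gray) if color is None else color)
        painted.append(out_row)
    return painted


# One row of three pixels: background, nucleus, cell body.
scores = [[[0.9, 0.05, 0.05], [0.1, 0.8, 0.1], [0.1, 0.2, 0.7]]]
classes = predict_classes(scores)
overlay = paint([[10, 20, 30]], classes)
```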

The pre- and post-processing required to capture useful and usable data

To make AI useful, a complete system must be in place to capture users' images from microscopes, prepare them for AI object classification, and then convert AI classification predictions into objects that can be counted and tracked. For example, in chemotaxis analysis additional processing is required to find x,y,z cell centers, connect new centers to previous cell tracks, and then calculate useful indicators of directed cell movement.

Additionally, a database is required to organize and track the massive amount of data that can be generated in a 96-well plate, for example. A presentation layer is required to present the information in a manageable fashion to the investigators.
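The three post-processing steps named above (find centers, link them to existing tracks, compute a movement indicator) can be sketched in a simplified 2D form. Everything here is an assumption for illustration: the source does not say how FastTrack AI finds centers or links tracks, so this sketch uses connected-blob centroids, greedy nearest-neighbor linking with a hypothetical `max_jump` limit, and directness (net displacement divided by path length) as the indicator.

```python
import math


def find_centers(mask):
    """Return the (x, y) centroid of each 4-connected blob of True pixels."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    centers = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            stack, pixels = [(x0, y0)], []
            seen[y0][x0] = True
            while stack:  # flood fill one blob
                x, y = stack.pop()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            centers.append((sum(p[0] for p in pixels) / len(pixels),
                            sum(p[1] for p in pixels) / len(pixels)))
    return centers


def link_centers(tracks, centers, max_jump):
    """Append each new center to the nearest unclaimed track end.

    A center farther than max_jump from every unclaimed track starts a new track.
    """
    unclaimed = list(range(len(tracks)))
    for center in centers:
        best = min(unclaimed,
                   key=lambda i: math.dist(tracks[i][-1], center),
                   default=None)
        if best is not None and math.dist(tracks[best][-1], center) <= max_jump:
            tracks[best].append(center)
            unclaimed.remove(best)
        else:
            tracks.append([center])
    return tracks


def directness(track):
    """Net displacement divided by path length; 1.0 means a straight path."""
    path = sum(math.dist(a, b) for a, b in zip(track, track[1:]))
    return math.dist(track[0], track[-1]) / path if path > 0 else 0.0


# Two frames with one cell moving one pixel to the right.
frame1 = [[True, False, False], [False, False, False]]
frame2 = [[False, True, False], [False, False, False]]
tracks = link_centers([], find_centers(frame1), max_jump=2.0)
tracks = link_centers(tracks, find_centers(frame2), max_jump=2.0)
```

Greedy linking is the simplest choice and can mislink cells in crowded fields; a production tracker would likely need a more careful assignment step and handling for cell division.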

The MetaVi Labs FastTrack AI system is a complete end-to-end automation system that supports high content screening applications. The system interfaces with popular living cell high content screening systems (such as the Incucyte) and automates the entire processing pipeline.

The Computational Cost

AI has enormous computational complexity. Only recently with the advent of 12nm silicon processes has it been possible to build AI machines that can keep pace with the human vision system.

As an example, consider the Lambda MAX Machine Learning workstation. It boasts:

- 4x Quadro RTX 8000 NVIDIA GPUs providing 490 teraflops of TensorFlow performance

- Intel W-2195 CPU with 18 Cores

- 256 GB Memory

- 2 TB SSD with 3,500 MB/sec read rate

- List Price of $29,000 USD

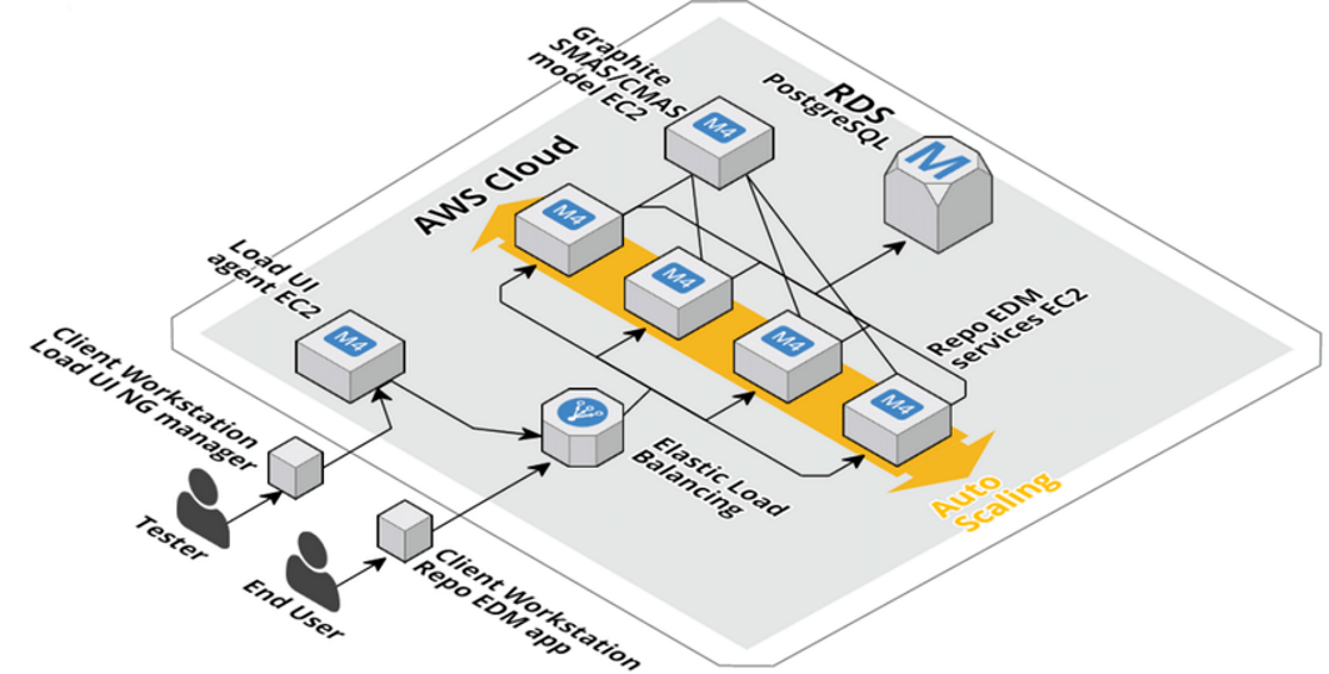

Allocating one of these machines to each experimenter would be prohibitively expensive. An alternative is to rent space on a cloud hosted virtual network, such as Amazon Web Services.

Cloud service providers, such as Amazon, Microsoft, Google, et al., provide elastic computation networks. These are vast networks of virtual machine fabrics. A virtual machine fabric is an array of specialized computational blocks where each block is designed to load and run complete operating systems on an as-needed basis.

For example, when the FastTrack AI platform detects that well recordings have been uploaded for analysis, it asks Amazon Web Services Elastic Compute Cloud (EC2) to launch an instance of our analysis platform. EC2 then finds an available virtual computer slice on its network, copies the entire C:\ drive that has been stored on its S3 storage platform into this computer slice, and starts it up. Once running, the analyzer processes all the well images and generates reports. When completed, it shuts down. EC2 then wipes the disk drive of the computer slice and shuts it down. This all happens within a few minutes by using solid state disk drives and memory based drive technology.

The MetaVi Labs FastTrack AI platform can launch hundreds of these virtual computer instances as needed and then shut them down when not in use. This allows FastTrack AI to scale up to thousands of wells of analysis per day. Sharing resources in this way also dramatically reduces costs, and those savings can be passed on to the end user.
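The launch-on-demand pattern described above can be sketched as a small sizing function. This is not MetaVi Labs' code. It assumes an object that behaves like the boto3 EC2 client, whose `run_instances` call accepts `ImageId`, `InstanceType`, `MinCount`, and `MaxCount`; the image ID, instance type, wells-per-instance figure, and instance cap are placeholder values. The `FakeEc2Client` class is a hypothetical stand-in so the sketch can run without AWS credentials.

```python
import math


def launch_analyzers(ec2_client, pending_wells, wells_per_instance,
                     max_instances, image_id, instance_type):
    """Request enough analyzer instances to cover the pending wells.

    Returns the IDs of the instances that were launched.
    """
    if pending_wells <= 0:
        return []
    wanted = min(max_instances, math.ceil(pending_wells / wells_per_instance))
    response = ec2_client.run_instances(
        ImageId=image_id,            # machine image holding the analyzer
        InstanceType=instance_type,
        MinCount=1,                  # accept a partial launch if capacity is short
        MaxCount=wanted,
    )
    return [instance["InstanceId"] for instance in response["Instances"]]


class FakeEc2Client:
    """Hypothetical stand-in for dry runs; records requests, never calls AWS."""

    def __init__(self):
        self.requests = []

    def run_instances(self, **kwargs):
        self.requests.append(kwargs)
        count = kwargs["MaxCount"]
        return {"Instances": [{"InstanceId": f"i-fake{n:04d}"} for n in range(count)]}


# A 96-well plate at an assumed 10 wells per instance needs 10 instances.
client = FakeEc2Client()
ids = launch_analyzers(client, pending_wells=96, wells_per_instance=10,
                       max_instances=100, image_id="ami-EXAMPLE",
                       instance_type="g4dn.xlarge")
```

No terminate call appears here because, as described above, each analyzer shuts itself down once its reports are generated.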